“To be ignorant of what occurred before you were born is to remain always a child.” — Cicero

It’s January 1933, the month of Hitler’s ascension as Chancellor of Germany, and a new morning for the Nazification of Christianity has arrived. So said Emanuel Hirsch, for whom there was no distinction between Christian belief and German Volk. So said Paul Althaus, for whom “the German Hour of the Christian” had arrived. And so said Gerhard Kittel, Germany’s leading Nazi Christian theologian and Senior Editor of the “Theological Dictionary of the New Testament.” In the 1920s, Kittel had expressed admiration for Jewish scholars of first-century Palestine, remarking in 1926 that everything Christ said could be found in the Talmud. But now it is 1933, and in his lecture, “Die Judenfrage,” Kittel clarified that only Jews of the first century were admirable. Contemporary Jews, on the other hand, could be tolerated temporarily as guests in Germany, but only until they formed their own non-German Volk. Kittel would serve 17 months in prison after the war.

For the theologians and countless other Germans, Hitler was the new Luther, indeed, as Dietrich Eckart proclaimed long before Hitler’s ascension to power, the Messiah.[i] The Nazi Christian movement, abetted by the establishment of the Protestant Reich Church (Evangelische Reichskirche) in 1933, grew by leaps and bounds. The Evangelicals led the charge, coalescing into a fighting church of young white anti-Semites aligned with Nazism and celebrating the Führerprinzip, the leader principle. With Hitler at the helm, the dissolute Weimar Republic would be supplanted by a revitalized Germany unrestrained by the Treaty of Versailles. Crippling inflation would give way to a robust economy, safeguarded by a reconstituted German army. German Jews, who poisoned the Volk and threatened the campaign of rebuilding, would be eliminated. In a word, Germany would be made great again.

This cartoonish image, “the seed of peace, not dragon’s teeth,” appeared in the magazine Kladderadatsch on March 22, 1936. The angel behind Hitler blows its horn to herald Hitler’s arrival as the new savior.

The Führerprinzip was no less salient in Italy, where Mussolini, appointed Prime Minister by King Victor Emmanuel III in 1922, was an inspiration to the young Hitler. Three years earlier, Mussolini had founded Italy’s National Fascist Party, and, as PM, he lost no time in transforming the liberal Italian state into a brutal fascist dictatorship. From the beginning, his followers hailed him as Il Duce or, in Latin, Dux, their supreme leader. No sooner did Mussolini come to power than Predappio, the village of his birth, became the site of daily pilgrimages, with followers visiting the family crypt and paying homage to the mother who gave birth to Il Duce in 1883.[ii] By 1926, Italians universally embraced him as, in the phrase later used by Pope Pius XI, a “man of Providence.”[iii]

In 1937, on the eve of Il Duce’s visit to Sicily, one excited Sicilian exclaimed, “We await our father, the Messiah. He is coming to visit his flock to instill faith.” He was not alone in his veneration. Pope Pius XI, ever grateful to Mussolini for his key role in signing the Lateran Pact of 1929, and convinced the Generalissimo would restore Catholicism’s privileged role in Italian life, called Mussolini the “man whom Providence has sent us.” And Mussolini, a fervent socialist and anticlerical until 1919, came under his own spell: he believed he was indeed the Chosen One, destined to effect Italy’s spiritual rebirth under the banner of fascism.

_________________________

“And on June 14, 1946, God looked down on his planned paradise and said, ‘I need a caretaker.’ So God gave us Trump.”[iv]

So intones the narrator of “God Made Trump,” the video produced by the Dilley Meme Team that has invaded the internet in recent weeks. Trump, mindful of his divine calling, saw fit to post the video on his social media platform and has broadcast it at campaign events. What can one say of a Christian god who, in His wisdom, chooses as messianic caretaker a convicted sexual predator whose life is suffused with corruption, misanthropy, and criminality? Obviously, Evangelical Christians, who hail Trump’s ordained arrival, are content to brush aside matters of character and behavior. God may well choose as caretaker a man who has lied, cheated, and intimidated his way to power, a man whose pretense of religiosity was openly ridiculed by his own Evangelical vice-president. During his time in office, Trump’s sole visit to St. John’s Episcopal Church, be it noted, was a crass photo-op, with police using tear gas and rubber bullets to remove peaceful protestors from Lafayette Square, so that Trump’s walk from the White House, to the photographers’ delight, would be straight and clear.[v]

But manifest irreligiosity has not mattered. For a majority of American Evangelical Christians, immoral acts in personal life do not compromise a politician in the public sphere, where ethical sensibilities may, mysteriously, be revitalized.[vi] But in Trump’s case the very distinction is moot: Moral depravity and criminal behavior have been foundational to both spheres.

But wait – haven’t we been here before? Wasn’t Mussolini, in his biographer’s words, “God’s chosen instrument of Italy’s spiritual rebirth”?[vii] And wasn’t Spain’s Franco the darling of the Roman Catholic Church, God’s warrior for ridding the nation of godless Communists, tearing down the Second Republic, and restoring the Church’s privileged role in Spanish life? After the Spanish Civil War (1936-1939), didn’t Pope Pius XII himself, staunch (if silent) supporter of Franco throughout the war, bless Franco as Defender of the Catholic Faith? In so doing, the Pope managed to overlook the 100,000 Republicans executed by Franco’s Fascist Nationalists during the war, along with an additional 50,000 put to death at war’s end in 1939. Right into the 1960s, when the Vatican, under Pope Paul VI, began to retreat from Franco, he continued to cloak himself in the mantle of Catholic chosen-ness, appearing at national events, such as Leon’s Eucharist Congress of 1964, with the golden chain and cross of the Vatican’s Supreme Order of Christ proudly draped over his army uniform. Perhaps the National Association of Evangelicals will confer on the irreligious Trump a wearable decoration that consecrates his status as America’s Messiah.[viii]

Back in Italy, Mussolini’s charge, throughout the 1930s, was to make Italy great again, to forge a New Roman Empire that would dominate the Mediterranean and, through a vast colonial empire, become an international power of the first order. The aim of his regime, he proclaimed in open-air speeches broadcast over radio, was “to make Italy great, respected, and feared.”[ix] Now, of course, Christians are exhorted to embrace Donald Trump as the divine caretaker who will “fight the Marxists” in our midst. So avers the narrator of “God Made Trump.” “Donald Trump carries the prophetic seal of the calling of God,” chimes in cultist pastor Shane Vaughn. “Donald Trump is the Messiah of America.”[x] For Trump, of course, it is the “radical left,” a jumble of Communists, Marxists, atheists, and Democratic appointees that threaten him, and by implication, the nation. They have all coalesced into a mythical “deep state” that, in some imponderable way, stole the 2020 presidential election from him and led to the criminal indictments and civil suits in which he is ensnared. His plaint is about as convincing as Hitler’s insistence that, in some equally imponderable way, international Jewish business interests conspired to defeat Germany in World War I and then impose the ruinous Versailles Treaty.

In America today, we are long past George Santayana’s cautionary words of 1905, “Those who cannot remember the past are condemned to repeat it.”[xi] For a disconcertingly large segment of the American electorate, including a majority of surveyed Christian Evangelicals, the past has indeed gone unremembered, and a new Chosen One has appeared on the scene. Throughout the 20th century, the deification of autocratic political leaders has had horrendous consequences. To indulge in it yet again, and now in support of a psychopathic miscreant, is to proffer an apotheosis that is, quite literally, mind-less.

Trump was a child of privilege who never outgrew the status of a privileged child. We are well advised to heed Cicero’s admonition, to learn what occurred before us, and to push beyond Trump-like arrested development that consigns us to permanent childhood – a childhood that, by encouraging the sacralization of political figures, is pernicious and potentially disastrous.

___________________

My great thanks to my gifted wife, Deane Rand Stepansky, for her help and support.

[i] See, for example, David Redles, Hitler’s Millennial Reich: Apocalyptic Belief and the Search for Salvation (NY: New York Univ. Press, 2008), chapter 4, “Hitler as Messiah.”

[ii] Predappio, liberated from fascism in 1944, remains a Mussolini pilgrim site to this day. Predappio Tricolore, its souvenir shop, “teeming with fascist memorabilia, including copies of Adolf Hitler’s Mein Kampf, has always done brisk trade.” “Pilgrims to Mussolini’s birthplace pray that new PM will resurrect a far-right Italy,” The Guardian, October 23, 2022 (https://www.theguardian.com/world/2022/oct/23/pilgrims-to-mussolinis-birthplace-pray-that-new-pm-will-resurrect-a-far-right-italy).

[iii] John Whittam, “Mussolini and the Cult of the Leader,” New Perspective, 3(3), March 1998 (http://www.users.globalnet.co.uk/~semp/mussolini2.htm).

[iv] “God Made Trump” (https://www.youtube.com/watch?v=lIYQfyA_1Hc).

[v] “Trump shares bizarre video declaring ‘God made Trump,’ suggesting he is embracing a messianic image” (https://www.businessinsider.com/trump-shares-bizarre-video-declaring-god-made-trump-2024-1); “‘He did not pray’: Fallout grows from Trump’s photo-op at St. John’s Church” (https://www.npr.org/2020/06/02/867705160/he-did-not-pray-fallout-grows-from-trump-s-photo-op-at-st-john-s-church).

[vi] In 2010, according to a public opinion poll conducted by the Public Religion Research Institute, only three in 10 American Evangelicals believed immoral acts in personal life did not disqualify a person from holding high public office; in 2016, following Trump’s election to the Presidency, this percentage had increased to 72%. What, pray tell, is the percentage now? These statistics come from Robert Jones, president of PRRI. “A video making the rounds online depicts Trump as a Messiah-like figure” (https://www.npr.org/2024/01/26/1227070827/a-video-making-the-rounds-online-depicts-trump-as-a-messiah-like-figure).

[vii] See Denis Mack Smith, Mussolini (Essex, UK: Phoenix, 2002), passim.

[viii] For the key facts of the Spanish Civil War, see the Holocaust Encyclopedia (https://encyclopedia.ushmm.org/content/en/article/spanish-civil-war#:~:text=During%20the%20war%20itself%2C%20100%2C000,forms%20of%20discrimination%20and%20punishment). On the Church’s measured retreat from Franco, and Franco’s appearance at the Eucharist Congress in Leon of July, 1964, see “Franco Stresses Spain’s Ties to Church,” New York Times, July 13, 1964.

[ix] See, inter alia, Stephen Gundle, Christopher Duggan, & Giuliana Pieri, eds., The Cult of the Duce: Mussolini and the Italians (NY: Manchester University Press, 2013).

[x] Vaughn’s declaration of Trump’s divinity is quoted on various internet sites, e.g., https://twitter.com/RightWingWatch/status/1610661508601487363.

[xi] In 1948, Winston Churchill recurred to Santayana’s warning even more tersely: “Those that fail to learn from history are doomed to repeat it.”

Copyright © 2024 by Paul E. Stepansky. All rights reserved. The author kindly requests that educators using his blog essays in their courses and seminars let him know via info[at]keynote-books.com.

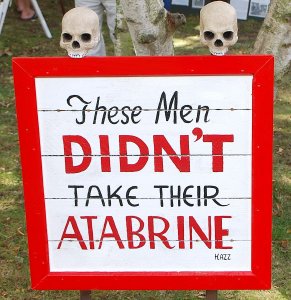
